Exhibited at ICC (2022)

ABOUT

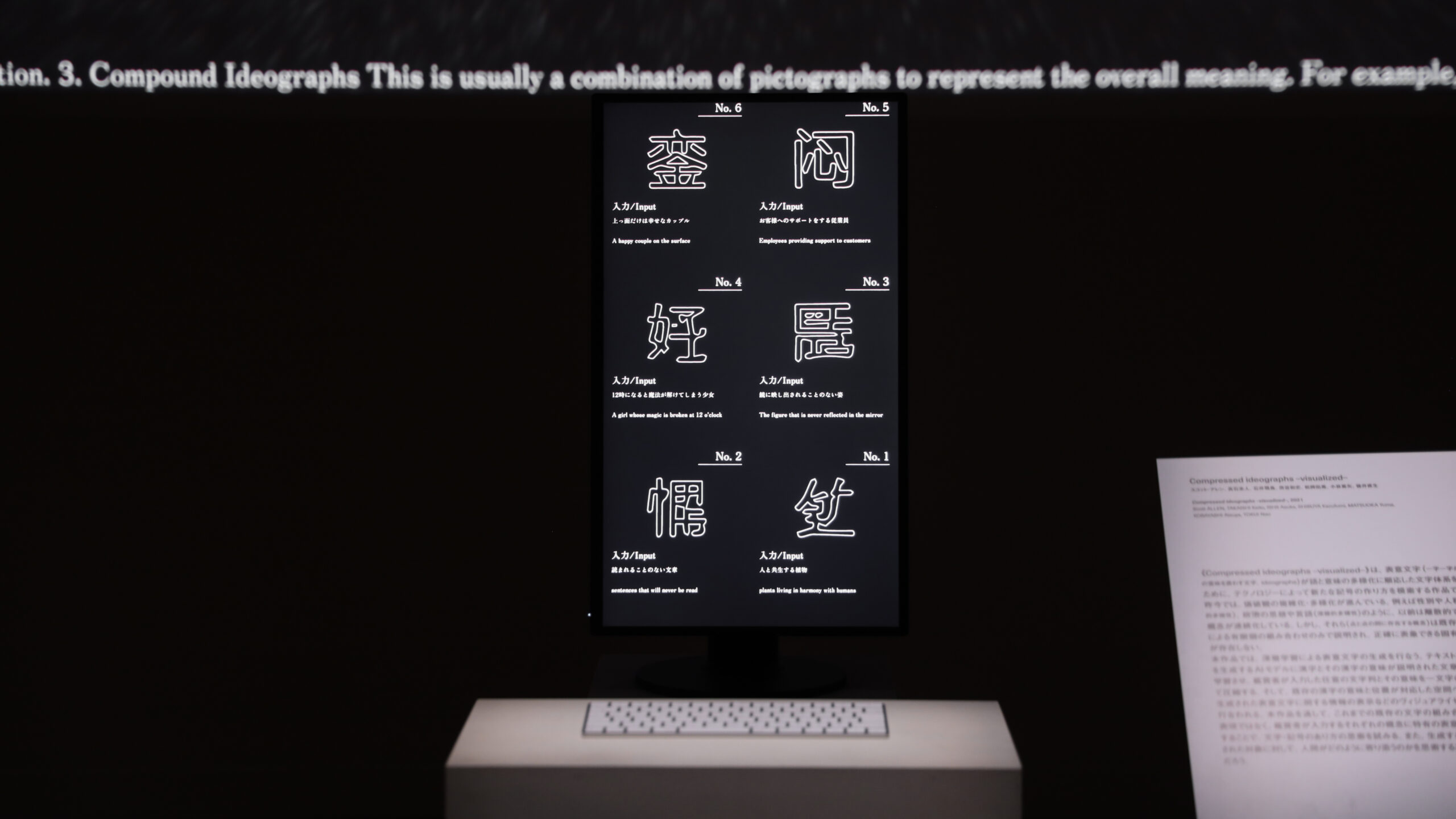

This work is an interactive installation in which viewers experience the creation and visualization of kanji by entering text and receiving a character from a new series of kanji named “Compressed ideographs”. These characters are generated by DALL-E, a deep learning model. This method differs from all six methods of kanji formation used throughout history (pictographs, simple ideographs, compound ideographs, phono-semantic compounds, derivative characters, and phonetic loans).

Since the second century, kanji have been classified into six categories (Rikusho) according to their origins. New kanji are still created today, for example for newly discovered chemical elements, but people create them using these existing methods. In today’s increasingly complex and diverse world, can kanji created only by conventional methods explain the world? In this work, we used a deep learning model to create a seventh category, which we named “Compressed ideographs” and which can be applied to any text.

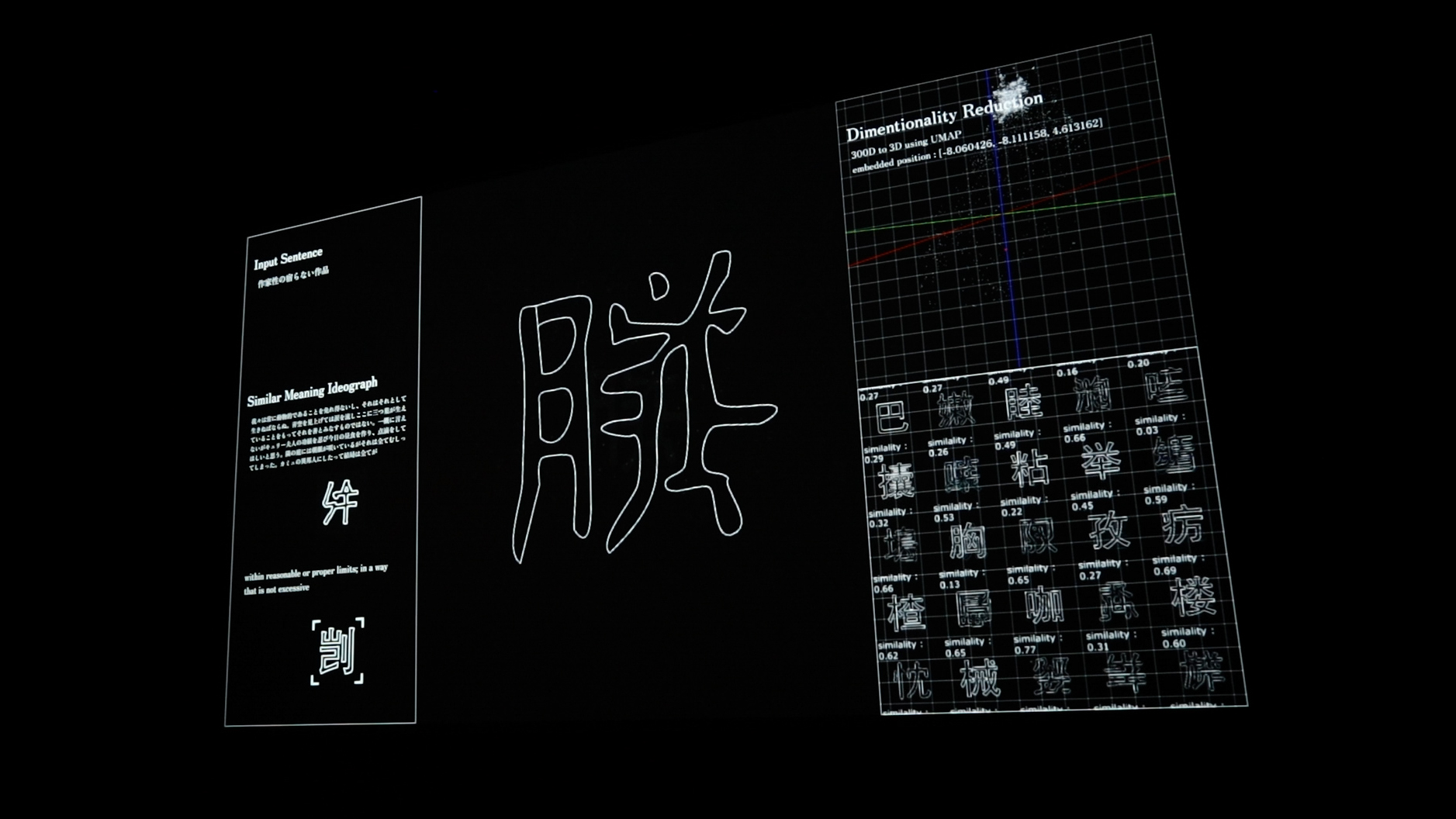

We generated the kanji with DALL-E, a transformer-based text-to-image model, which we trained on a large number of pairs of kanji and sentences describing their meanings. As a result, any string or sentence entered by the viewer is compressed into a single generated kanji.

At the same time, the viewer’s input is converted into a 300-dimensional vector by a Doc2Vec model we trained, and its position is then computed in a 3D space produced by the dimensionality reduction algorithm UMAP. The newly generated kanji is placed in this space, which represents the meanings of strings and sentences, alongside a large number of existing kanji. The relationship between the new character and the existing ones is further visualized by displaying the existing kanji closest in meaning to it and by randomly displaying many other existing kanji similar to it.
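The placement and "closest in meaning" lookup described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the installation's code: it assumes the Doc2Vec and UMAP stages have already produced 3D coordinates for every character, it treats Euclidean distance in that 3D space as the measure of closeness (the work does not specify its metric), and the `nearest_kanji` function and all coordinates are hypothetical toy values.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def nearest_kanji(query: Point, space: Dict[str, Point], k: int = 3) -> List[str]:
    """Return up to k existing kanji whose 3D coordinates lie closest to `query`.

    Hypothetical helper: closeness is assumed to be Euclidean distance
    in the UMAP-reduced space.
    """
    # Rank every existing character by its distance to the query point.
    ranked = sorted(space, key=lambda ch: math.dist(query, space[ch]))
    return ranked[:k]


# Hypothetical toy coordinates standing in for UMAP output of Doc2Vec vectors.
existing: Dict[str, Point] = {
    "海": (0.90, 0.10, 0.00),  # sea
    "川": (0.80, 0.20, 0.10),  # river
    "山": (0.10, 0.90, 0.00),  # mountain
    "火": (0.00, 0.10, 0.90),  # fire
}

# Hypothetical position computed for a newly generated character.
generated: Point = (0.88, 0.12, 0.02)

print(nearest_kanji(generated, existing, k=2))  # → ['海', '川']
```

Sorting all candidates is fine for a few thousand characters; a much larger inventory would more likely use a spatial index such as a k-d tree.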

By watching AI generate and plot kanji that capture complex features of the meaning of their text, viewers can explore the gap between characters created and fixed by humans and those generated by AI.

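Compressed ideographs -visualized- is a work that uses technology to explore new ways of creating symbols, so that ideographs (characters in which each character expresses a fixed meaning) can have a writing system that adapts to the diversification of words and meanings.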

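Today, values are becoming increasingly complex and diverse. Concepts that were once discrete, such as gender and race (surface-level diversity) or political ideology and language (deep-level diversity), are becoming continuous. However, these concepts, which lie between the points, can only be described by finite combinations of existing kanji, and no unique symbols exist that can represent them precisely.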

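In this work, ideographs are generated through deep learning. A text-to-image AI model is trained on pairs of kanji and sentences explaining their meanings, and any string entered by the viewer, together with its meaning, is compressed into a single kanji character. The result is then visualized, for example by placing it in a space whose positions correspond to the meanings of existing kanji and by displaying information about the generated ideograph.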

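Through this work, we attempt to reflect on the nature of characters and symbols by generating an ideograph specific to each concept the viewer enters, rather than expressing it through combinations of existing characters. It may also lead to reflection on how humans can relate to generative machines and the things they generate.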

DATE CREATED

September 25th, 2021.

CREDIT

Scott Allen (Direction, Machine learning, Visual programming)

Keito Takaishi (Machine learning, Visual programming)

Asuka Ishii (Machine learning, Video)

Kazufumi Shibuya (Machine learning, Visual programming)

Yuma Matsuoka (Visual programming)

Atsuya Kobayashi (Sound)

Nao Tokui (Technical advice)

Thanks:

Keio University SFC Computational Creativity Lab (Nao Tokui Lab)

AWARD

Yamanashi Media Arts Award 2021, Excellence Award

Asia Digital Art Award FUKUOKA, General Category / Interactive Art Division, Prize Winner

CONFERENCE

NeurIPS 2022 Workshop “Machine Learning for Creativity and Design”

VIDEO

Video by Asuka Ishii

Thanks:

Yuri Nakahashi (Cast)

NTT InterCommunication Center